Summary: Training a neural network usually involves defining a loss function that measures how far the model's output is from the desired outcome. The loss guides learning by quantifying the error between predicted and actual values.

In 2014, Ian Goodfellow and colleagues at the University of Montreal introduced Generative Adversarial Networks (GANs), which have since attracted significant attention in the AI community. Since 2016, interest in GANs has grown rapidly, leading to widespread exploration and application across many fields. Google recently released TFGAN, an open-source lightweight library aimed at simplifying the training and evaluation of GANs.

In traditional neural networks, a loss function is essential to measure performance. For instance, in image classification models, a loss function penalizes incorrect predictions. If a model misclassifies a dog as a cat, the loss value increases, signaling the need for correction during training.
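
As a minimal sketch of how such a penalty behaves, the snippet below computes cross-entropy, one common classification loss, for a single photo of a dog. The article does not name a specific loss, so the choice of cross-entropy and the probability values are assumptions made for illustration.

```python
import math

def cross_entropy(probs, true_label):
    """Negative log-probability the model assigned to the correct class."""
    return -math.log(probs[true_label])

# Hypothetical model outputs for one photo whose true label is "dog".
confident_correct = {"dog": 0.9, "cat": 0.1}
confident_wrong = {"dog": 0.1, "cat": 0.9}

loss_correct = cross_entropy(confident_correct, "dog")
loss_wrong = cross_entropy(confident_wrong, "dog")
print(f"correct: {loss_correct:.3f}, wrong: {loss_wrong:.3f}")
```

The misclassified prediction yields the larger loss, which is the signal training uses to adjust the model.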

However, not all problems can be easily addressed with conventional loss functions—especially those involving human perception, such as image compression or text-to-speech systems. These tasks often require more nuanced measures that go beyond simple numerical errors.
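
A toy example hints at why. Mean squared error, used here as a stand-in for a conventional loss, can prefer a blurry output over a sharp one that is slightly misaligned. The one-dimensional "images" below are made up for illustration and are not taken from the experiments discussed in this article.

```python
def mean_squared_error(a, b):
    """Average squared per-pixel difference between two equal-length signals."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

# Made-up 1-D "image": a sharp stripe pattern.
original = [0.0, 1.0, 0.0, 1.0]
# Equally sharp, but shifted by one pixel.
sharp_shifted = [1.0, 0.0, 1.0, 0.0]
# All detail averaged away.
blurry = [0.5, 0.5, 0.5, 0.5]

mse_sharp = mean_squared_error(original, sharp_shifted)
mse_blurry = mean_squared_error(original, blurry)
print(mse_sharp, mse_blurry)
```

The blurry signal scores better under this metric even though a viewer would likely judge the shifted stripes closer to the original, which is the kind of mismatch with human perception described above.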

GANs have enabled breakthroughs in areas such as text-to-image generation, super-resolution, and robotic control. They also pose new challenges in both theory and implementation, and the fast pace of GAN research is hard to keep up with.

TFGAN is meant to make GAN-based experiments more accessible. It provides the infrastructure for training a GAN, well-tested loss functions and evaluation metrics, and easy-to-use examples that demonstrate its flexibility.

Additionally, Google released a tutorial featuring a high-level API that allows users to quickly train models using their own data. This makes it easier for developers and researchers to experiment with GANs without getting bogged down by complex code.

The image above illustrates the effect of adversarial loss on image compression. The top row shows an image patch from the ImageNet dataset. The middle row shows the result of compressing and decompressing the image with a network trained on a traditional loss function. The bottom row shows the output when adversarial loss is used during training. Although the GAN-trained output may differ slightly from the original, it is sharper and more detailed than the output trained with the traditional loss.
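
The source does not say which adversarial loss was used for the bottom row. As a rough sketch, the widely used non-saturating GAN formulation scores a generator by how convincingly its output fools a discriminator, not by pixel-wise distance. The function names and the discriminator outputs below are illustrative assumptions.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Low when real data is scored near 1 and generated data near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: low when the fake is scored near 1."""
    return -math.log(d_fake)

# Hypothetical discriminator outputs: probability that an input is real.
fooled = generator_loss(d_fake=0.8)
caught = generator_loss(d_fake=0.1)
print(f"generator loss when fooling D: {fooled:.3f}, when caught: {caught:.3f}")
```

Because the generator is rewarded for looking real, not for matching every pixel, it has an incentive to produce sharp detail even if that detail differs slightly from the original.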

TFGAN supports a wide range of experiments by offering a simple function call that covers most GAN use cases. You can train your model with your own data in just a few lines of code. Its modular design also allows for more specialized GAN architectures to be implemented easily.

You can choose which modules to use—such as loss functions, evaluation metrics, features, or training procedures—each of which is independent and reusable. The lightweight nature of TFGAN ensures compatibility with other frameworks or native TensorFlow code, giving you greater flexibility.
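
The idea of independent, reusable loss modules can be sketched in plain Python. Everything below is defined locally for illustration and is not TFGAN's API: the helper `compute_losses` and the four loss functions are hypothetical names, and the scores are made-up numbers. The point is only that the calling code stays the same when one loss pair is swapped for another.

```python
import math

def _mean(values):
    return sum(values) / len(values)

# Minimax losses: scores are probabilities in (0, 1) that an input is real.
def minimax_discriminator_loss(real_scores, fake_scores):
    real_term = _mean([math.log(s) for s in real_scores])
    fake_term = _mean([math.log(1.0 - s) for s in fake_scores])
    return -(real_term + fake_term)

def minimax_generator_loss(fake_scores):
    return -_mean([math.log(s) for s in fake_scores])

# Wasserstein losses: scores are normally unbounded critic outputs.
def wasserstein_discriminator_loss(real_scores, fake_scores):
    return _mean(fake_scores) - _mean(real_scores)

def wasserstein_generator_loss(fake_scores):
    return -_mean(fake_scores)

def compute_losses(real_scores, fake_scores, discriminator_loss_fn, generator_loss_fn):
    """Hypothetical helper: the loss pair is a plug-in, the caller is unchanged."""
    return (discriminator_loss_fn(real_scores, fake_scores),
            generator_loss_fn(fake_scores))

# The same made-up scores are reused for both pairs purely to keep the demo short.
real, fake = [0.9, 0.8], [0.2, 0.1]
print(compute_losses(real, fake, minimax_discriminator_loss, minimax_generator_loss))
print(compute_losses(real, fake, wasserstein_discriminator_loss, wasserstein_generator_loss))
```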

Models built with TFGAN can benefit from future improvements in infrastructure, and you can leverage a wide range of pre-implemented losses and metrics without having to rewrite them from scratch.

Moreover, the codebase is well tested, reducing the risk of common issues like numerical instability or statistical errors that often occur when working with GAN libraries.

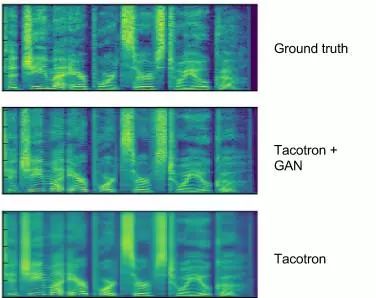

As shown in the image, traditional text-to-speech (TTS) systems often produce spectrograms that are overly smooth. By integrating GANs into the Tacotron TTS system, more realistic textures can be generated, resulting in audio outputs with fewer artifacts and improved naturalness.

By open-sourcing TFGAN, Google enables a broader audience to access the same tools used by its own researchers. This means anyone can take advantage of the latest advancements and improvements made within the library, fostering innovation and collaboration in the GAN space.
